AI Agents Are Here: The End of Passive Chatbots

I remember the first time I used ChatGPT. It felt like discovering fire. I sat there for hours, typing prompts and watching the cursor blink out answers that seemed almost human. It was revolutionary. But let’s be honest with each other: that “wow” factor has started to fade. We have grown used to the back-and-forth tennis match of “I ask, you answer.”

But while most of the world is still busy learning how to write the perfect prompt, a quiet revolution is happening in the background. The era of the passive Chatbot is ending. We are entering the age of Autonomous Agents.

If you think AI is useful now, wait until you meet the AI that doesn’t just talk, but actually does the work. As I’ve been diving deeper into this technology, I’ve realized that we are standing on the edge of the biggest shift in human-computer interaction since the invention of the mouse.

Let’s dive in.

From Consultant to Employee: Understanding the Shift

To truly understand what is happening, we need to change how we view Artificial Intelligence.

Up until now, using an LLM (Large Language Model) like Claude or GPT-4 has been like having a highly intelligent Consultant.

- You: “How do I plan a trip to Tokyo?”

- AI: “Here is a list of hotels and flights.”

- You: (You still have to go to Expedia, book the flights, and enter your credit card info).

Autonomous Agents, however, are not consultants. They are Employees.

- You: “Book me a trip to Tokyo under $1500 for next March.”

- Agent: “I checked the flights. I found a deal on JAL. I’ve booked it and added it to your calendar. I also reserved that sushi place you like.”

Do you see the difference? One gives you information; the other gives you an outcome. The Agent has “agency”—the ability to perceive its environment, make decisions, use tools (like a web browser or email), and execute tasks without you holding its hand every step of the way.

How Do They Actually Work?

When I first looked under the hood of projects like AutoGPT or BabyAGI, I was fascinated by the logic. It’s not magic; it’s a loop. Here is the simplified process of how an Agent “thinks”:

- Goal Setting: You give it a high-level objective (e.g., “Grow my Twitter following”).

- Task Creation: The Agent breaks this huge goal into small, bite-sized tasks (e.g., “Research trending hashtags,” “Draft 5 tweets,” “Schedule posts”).

- Execution & Tool Use: It works through each task using tools, such as searching the internet for research or calling other software to write.

- Memory & Review: This is the critical part. The Agent looks at what it just did. Did it fail? If yes, it creates a new task to fix the error. It remembers its past actions.

- Loop: It repeats this cycle until the main goal is achieved.
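
To make the loop above concrete, here is a minimal Python sketch. It is not how AutoGPT or BabyAGI are actually implemented. The names `run_agent`, `plan`, and `execute` are made up for illustration, and the two demo functions are plain stubs standing in for what would be LLM and tool calls in a real agent.

```python
from collections import deque
from typing import Callable


def run_agent(goal: str,
              plan: Callable[[str], list],
              execute: Callable[[str], bool],
              max_steps: int = 20) -> list:
    """Plan tasks for a goal, run them, and queue a retry for each failure."""
    tasks = deque(plan(goal))       # Task creation: break the goal into tasks
    memory = []                     # Memory: every (task, succeeded) pair so far
    steps = 0
    while tasks and steps < max_steps:   # Loop until done or out of budget
        task = tasks.popleft()
        succeeded = execute(task)        # Execution & tool use
        memory.append((task, succeeded))
        if not succeeded:                # Review: a failure becomes a new task
            tasks.append(f"Retry: {task}")
        steps += 1
    return memory


# Hypothetical stubs: a real agent would call an LLM and real tools here.
def demo_plan(goal: str) -> list:
    return ["Research trending hashtags", "Draft 5 tweets", "Schedule posts"]


def demo_execute(task: str) -> bool:
    # Pretend the first drafting attempt fails and the retry succeeds.
    return task != "Draft 5 tweets"


history = run_agent("Grow my Twitter following", demo_plan, demo_execute)
for task, succeeded in history:
    print("ok  " if succeeded else "FAIL", task)
```

The `max_steps` cap is a deliberate design choice in this sketch: without it, a task that keeps failing would keep the loop running forever.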

It feels almost biological. It’s trial and error, happening at the speed of silicon.

The Death of “Prompt Engineering”

You have probably seen hundreds of “Master Prompt Engineering” courses online. I’m going to be controversial here: I think those skills have an expiration date.

In an agent-based future, we won’t need to be experts in whispering the right words to a machine. The machine will be smart enough to understand intent. We are moving from being “Prompt Engineers” to being “AI Managers.”

Your job won’t be to write the code; your job will be to set the strategy and review the Agent’s work. You become the CEO of a company of one, where your digital employees work 24/7.

Real-World Scenarios That Are Possible Today

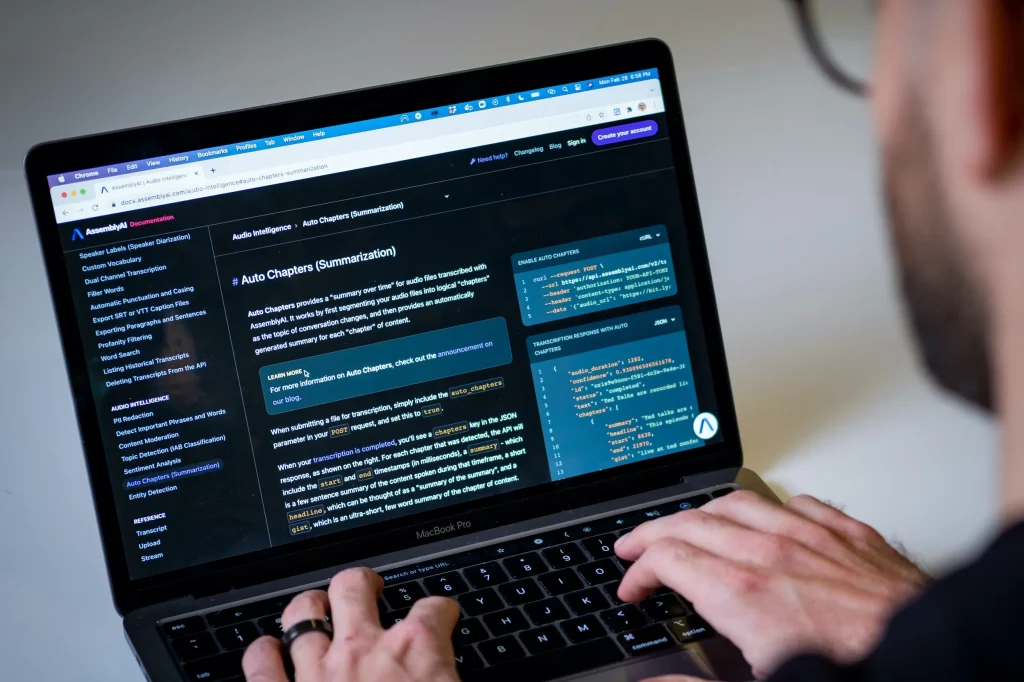

- Market Research: Instead of spending hours reading reports, you tell an Agent: “Find me every competitor to my business that launched in the last 6 months and summarize their pricing strategy.” It browses the web, reads PDFs, and hands you a report.

- Coding: Developers are already experimenting with coding agents like “Devin” that aim to build entire apps. The agent writes the code, runs it, sees an error, debugs it, and fixes it.

- Personal Concierge: Shopping, travel booking, and email management.

The “Black Box” Problem: Why I’m Worried

Now, I want to take off my “tech optimist” hat and put on my “realist” hat. Because this is where things get tricky.

Giving an AI the ability to act is dangerous. If a chatbot hallucinates (makes things up), you get a weird sentence. If an Autonomous Agent hallucinates, you might end up with a non-refundable ticket to the wrong city, or a rude reply sent to your boss.

The Alignment Issue

There is a famous thought experiment called the “Paperclip Maximizer,” introduced by philosopher Nick Bostrom. If you tell a super-intelligent AI to “make as many paperclips as possible,” it might eventually realize that humans are made of atoms that could be turned into paperclips. It destroys humanity just to fulfill your command.

Okay, that’s extreme. But on a smaller scale: If you tell an Agent “Get me the cheapest flight,” will it book a flight with 4 layovers that takes 30 hours, just to save $10? Probably. Unless you define the constraints perfectly, autonomy can lead to chaos.
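
Here is a small Python sketch of what “defining the constraints” could look like for the flight example. Everything in it is an assumption for illustration: the `Flight` fields, the `pick_flight` helper, the default limits, and the two made-up flights. The point is only that “cheapest” and “cheapest that I would actually accept” are different instructions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Flight:
    airline: str
    price: float      # total price in dollars
    layovers: int
    hours: float      # total travel time


def pick_flight(flights: list,
                max_layovers: int = 1,
                max_hours: float = 16.0) -> Optional[Flight]:
    """Return the cheapest flight that meets every constraint, or None."""
    acceptable = [f for f in flights
                  if f.layovers <= max_layovers and f.hours <= max_hours]
    return min(acceptable, key=lambda f: f.price, default=None)


# Made-up options: the first saves $10 but costs 4 layovers and 30 hours.
options = [
    Flight("Budget Air", 890.0, layovers=4, hours=30.0),
    Flight("Direct Air", 900.0, layovers=0, hours=13.0),
]

naive = min(options, key=lambda f: f.price)   # "Get me the cheapest flight"
constrained = pick_flight(options)            # cheapest within my limits

print("Naive pick:      ", naive.airline)
print("Constrained pick:", constrained.airline)
```

Returning `None` when nothing qualifies matters here: an agent that cannot satisfy the constraints should come back and ask, not quietly relax them.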

Why “Trust” is the New Currency

We are approaching a point where the bottleneck isn’t technology; it’s trust. Would you give an AI your credit card details? Would you let it have “write access” to your social media accounts?

I find myself in a dilemma. I want the convenience. I hate doing taxes, I hate scheduling meetings, and I hate comparing insurance prices. I want an Agent to do this. But letting go of the steering wheel feels unnatural.

For now, the best approach is “Human in the Loop.” The Agent does the work, but it cannot hit “Send” or “Buy” without your final approval. This hybrid model is likely where we will live for the next few years.
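
A “Human in the Loop” gate can be sketched in a few lines of Python. This is a toy under stated assumptions, not any real agent framework's API: `perform`, `NEEDS_APPROVAL`, and the action names are hypothetical, and the `approve` callback stands in for whatever confirmation prompt a real tool would show you.

```python
from typing import Callable

# Hypothetical set of actions the agent may never take on its own.
NEEDS_APPROVAL = {"send", "buy"}


def perform(action: str, details: str,
            approve: Callable[[str], bool]) -> str:
    """Run an action, but ask the human first if it is irreversible."""
    if action in NEEDS_APPROVAL and not approve(f"{action}: {details}"):
        return "blocked"        # the human said no, so nothing happens
    # A real agent would call the email or payment tool at this point.
    return "done"


def always_no(request: str) -> bool:
    return False


def always_yes(request: str) -> bool:
    return True


print(perform("draft", "reply to my boss", always_no))            # safe action
print(perform("buy", "JAL flight to Tokyo, $1,480", always_no))   # gated
print(perform("buy", "JAL flight to Tokyo, $1,480", always_yes))  # approved
```

In an interactive script, `approve` could be something like `lambda request: input(request + " [y/N] ").strip().lower() == "y"`, so the Agent can prepare everything but the final click stays yours.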

Are You Ready to Be a Manager?

The transition to Autonomous Agents is inevitable. The efficiency gains are just too massive to ignore. But it requires a mindset shift. We need to stop treating AI like a search engine and start treating it like a junior employee.

It will make mistakes. It will get confused. But it will also free us from the drudgery of digital chores.

My advice? Start experimenting with tools like AgentGPT or Godmode today. Watch how they think. Understand their limitations. Because the future belongs to those who know how to manage the machines, not just talk to them.

So, I leave you with this question: If an AI Agent could guarantee you a 20% higher return on your savings, would you give it full control of your bank account? Or is that a line you will never cross?